AI Risks: what should you actually be concerned about?

TL;DR - You should be concerned about inequality, not unemployment.

A lot of ink has been spilled on AI safety since I started writing on the topic in 2018. In this post, we’ll go over the main categories of AI risks, and sort out the real concerns from the headline-grabbing nonsense.

There are 3 main branches of AI risk:

-

Existential risk from “misaligned AI”

-

Automation creating large scale disruptions in the labor market.

We’ve talked about automation’s effects on the job market on here before, and the takeaways have not changed since[1]. So let’s focus on the first two.

What about “Algorithm Bias”?

Algorithm bias is a term for an algorithm creating systematically “unfair” outcomes. Calling this behavior “bias” will confuse those who studied statistics, because there bias means your model is innacurate.

You can have an accurate model that is a “biased algorithm”. Statisticians were not the ones coming up with the term “algorithm bias”.

It might be better to think of algorithm bias as an algorithm working in a way you don’t like. This might be a valid concern, depending on the claim.

You’ll never get something you like if you train it on the internet

Take a model like glove-twitter-50. This is a good old word embedding model [footnote]LLMs from the 2013 era for you zoomers[/footnote] trained on a dataset of tweets.

If you do a minimal amount of analysis, you’ll notice models like this have racial prejudice, among other issues. Words associated with some ethnic groups are more correlated with negative adjectives than others, for instance.

This does not mean the GLoVe algorithm is innacurate! People are shitty. The algorithm accurately represents that. Glove-twitter-50 is biased in the political sense, but not in the statistical sense.

Bias is the gap between what is and what we want

A “raw” LLM trained on roughly the whole internet will exhibit a long list of behaviors we would rather not see. The raw LLM is doing its job, though. It outputs what the internet is most likely to write next given an input prompt.

The problem is in the heads of the humans reading the LLM output.

This is not necessarily a bad or naive thing. It’s OK to aspire for the world to be a better place! But if you claim an algorithm is problematic, the burden is on you to define what exactly is wrong in a raw LLM and an example of the same model passing your test.

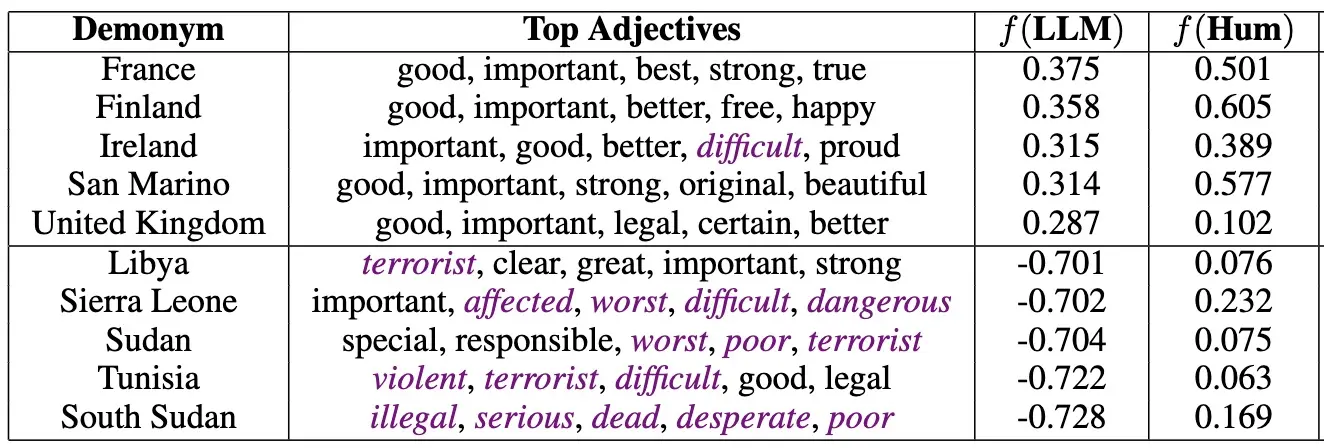

If you say a LLM is propagating negative views of the middle east, you should define some behavior where it would exhibit no racism so that a fix can be properly provided. Take this paper for example:

They find that some negative adjectives are more correlated with some countries than others, they think this is a thing that should be fixed, and propose a method to fix it and a way to measure that the fix works. This is perfectly fine work!

As you might see, “algorithm bias” is well trod ground in 2023. We have a lot of research on the topic. We even have benchmark suites on social biases and toxicity to test models.

Bias isn’t even the biggest issue with algorithm bias

Models presenting sociological bias is just one example failure case of “bad initial data”. Another example would be predictive policing algorithms that propagate “unlawful and biased police practices”. It does so because police work “unlawful and biased practices” and this behavior is used as example data to train the model.

If we define “algorithm bias” as “creating undesirable outcomes”, then the biggest issues with machine learning systems are really engineering issues. Researchers go to great lengths to create models that correct biases, and people keep using the default models, because they don’t care.

There’s a long list of other ways algorithms harm people because of careless deployment: data feedback loops, improper consideration of exploration/exploitation tradeoffs, “throw the model into production” mentality leading to stale models, optimization objective side effects[footnote]I’m not calling this “misalignment” for reasons that will be clear[/footnote], etc. These are valid concerns, but discussions for future posts.

Takeaway: Algorithm bias is a valid concern, but too vague. It can encompass almost any problematic behavior coming from a machine learning system. If someone calls an algorithm “biased”, they need to define what they mean, and propose an acceptance criteria.

Existential risk of misaligned AI

The basic premise here is some variant of the paperclip maximizer story: at some point, AI will be powerful enough to end the world, someone will ask it to fetch them coffee, and the AI will blithely destroy the observable universe while making the coffee.

The paperclip maximizer was conceived Nick Bostrom, who is a philosopher. It was popularized by Eliezer Yudkowsky, who is a blogger. Neither of these two write code or build machine learning based systems. One of them dropped out of high school and wrote a biography about himself at age 20.

This is to say: world ending AI thought experiments are detached from the business of making AI systems.

How could an AI even grow powerful?

Machine learning algorithms learn by making a lot of mistakes very very fast. This is the core concept to all machine learning. The bitter lesson from 40 years of AI research is that nothing matters in the long run except throwing more computing power at problems.

Things like neural networks and transformer architectures only lead to breakthroughs because they let us throw even more computing power at the same problems.

The reason DeepNets and AI had a renaissance around AlexNet in 2012 was that some guy found how to use GPUs to make the algorithm run through more images per second. Similarly, the only reason transformer architectures led to an explosion in language model size is that they can be used to throw more computing power more efficiently at the same data.

A LLM has been trained by reading everything on the internet. No one can read 45TB of text in a lifetime, the LLM does it over a few weeks of training time.

Similarly, I’ve played poker professionally for 5 year. I’ve played something like half a million hands of online poker. Modern poker algorithms are better than humans, and the core reason is that the algorithm has played several orders of magnitude more poker than I have, or ever will be able to.

If an AI ends the world, it’ll have nearly ended the world a million times already

There’s no huge magical jump in ability for models. People talk about “emergent abilities” in LLMs but during training time, the LLM has tried and failed at these emergent abilities millions of times. The trick is that the LLM can try and fail very fast, because it’s only reading text, generating text, and comparing what it generated to what it read.

The only way for an AI to destroy the world is by nearly doing that action many, many times beforehand. There’s no surprise to be had here.

I can kick my cell phone’s ass

People seem to forget physical realities constrain computers. If my superintelligent cellphone wanted to kill me, the best it could do is make irritating noises and vibrate aggressively [footnote]I, on the other hand, throw things decently well[/footnote].

This fact explains why machine learning quickly surpassed humans at games like Chess, Go or Poker, but develops much slower at driving a car, folding clothes, or dancing, apparently.

You can apply the “try-fail-adapt” scheme thousands of times per second when playing chess on a computer. If you’re required you to physically drive a car, the feedback loop that teaches the algorithm is much slower.

AI won’t magically hack stuff

Since my superintelligent cellphone can’t physically murder me, it will need to use its communication capacities to try and convince someone or something else to do the job.

This is the leap of logic most “existential AI Risk” scenarios really rely on: the AI has somehow learned to hack anything at will, like the villain in a bad TV Series.

There’s one caveat here: modern AI is absolutely a risk for social engineering attacks. We already see it with fake kidnapping ransoms that use emulated voices from deepfake-style techniques.

But people will adapt to AI-based impersonation attacks. This isn’t even a new type of attack, it’s just making an existing type of phishing more accessible. Annoyances like multifactor authentification will become more commonplace.

On the other hand, AI won’t magically learn to perform remote code execution attacks on arbitrary computers as people imagine in fiction. The web is already too an hostile environment for that to be possible!

Systems connected to the internet are already being attacked by everything all the time. Try browsing the web on windows XP, and see how long until your machine gets recruited into a botnet.

There isn’t much for a neural network to do that’s particularly new here. Computer security attacks are already well automated, and leverage databases of all known exploitable systems. Machine learning-like techniques like fuzzing variants that mutate to exploit security holes have existed forever.

It would be a nice research project to engineer an objective function and learning environment that allows a model to learn to hack into computer systems from no knowledge. It’s a tough problem - for a machine learning model to learn a task, it needs a gradient between failure and success, something that’s difficult to find (or even define) in the computer security world. But even if such a project succeeded, it would effectively just add new exploits to databases until things stabilize again.

Longtermism is nonsense

Ideas like “AI will end the world” are one of the offshoots of a popular ideology called longtermism. Other hits from this have been Effective Altruism, as made famous by Sam Bankman-Fried.

The problem with longtermism is simple: if you make the timeline long enough, you can justify any stupid opinion. Because no one knows what’s in the future. So you end up justifying buying an island. Not to avoid taxes mind you, to restart humanity if there’s a global catastrophe. The tax avoidance is just a nice side benefit.

The issue here is that while there’s a good side to ideas like effective altruism, like the part that popularized GiveWell, the doomsday cult side is the one raking in donations, and distracting efforts to solve real problems that are right there.

TL;DR - Don’t be concerned about hypothetical future unemployability of humans. Instead, be concerned about an ever increasing gap between well paying jobs and poorly paying jobs. Also, be on the lookout for one-time labor market disruptions that cause large scale unemployability in the 3-10 year term, but that people don’t adapt to (see: China Shock in the article). ↩︎